Closing the gap between an AI-generated and a real shark

There was a time when sharks lived only in the ocean. Today, with the rise of AI capabilities, they can be generated in seconds. Sharp. Detailed. Feared. But bringing them into experiences is not as simple as typing a prompt. When it comes to integrating generative AI into products, constraints come into play. This article explores the use of AI-generated images in real products, with special emphasis on the challenges, variables, and possibilities involved. The examples focus on applications where real-time image generation is essential to provide a personalized experience for each user.

Shark Skeleton

Technology

Before diving into the variables that affect the output, here is a summary of some key methodologies behind image generation, each with a different approach, advantages and limitations:

Generative Adversarial Networks (GAN)

GANs are based on two models: a generator that creates fake samples from an input, and a discriminator that decides whether a sample is real (from the training data) or generated (fake). The generator improves its outputs to fool the discriminator into classifying them as real. After each result, both the generator and the discriminator adjust their behaviour.
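For readers who like to see the idea written down, this tug of war is usually expressed as the objective below. It is the classic formulation from the original GAN work, not necessarily what any specific product uses. The symbols are assumptions introduced here: $G$ is the generator, $D$ the discriminator, $x$ a real sample, and $z$ the random input given to the generator.

$$
\min_G \max_D \; \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big] + \mathbb{E}_{z \sim p_z}\big[\log\big(1 - D(G(z))\big)\big]
$$

The discriminator tries to make this value large by scoring real samples high and generated ones low, while the generator tries to make it small by producing samples the discriminator scores as real.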

Autoregressive models

These models generate images sequentially: each part, such as a pixel or a region, depends on the parts generated before it. This allows for detailed outputs, but because the parts are produced one after another, complex images often require more processing power.
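As a rough sketch, the sequential dependency can be written as a product of conditional probabilities. The notation is an assumption added here: $x_1, \dots, x_n$ are the parts of the image (pixels, patches, or tokens) in generation order.

$$
p(x) = \prod_{i=1}^{n} p\big(x_i \mid x_1, \dots, x_{i-1}\big)
$$

Each factor is one generation step, which is why the number of steps grows with the number of parts in the image.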

Diffusion models

These models are trained by adding noise to data and then learning how to reverse that process, reconstructing data from noise. When a diffusion model is used with a text prompt, the prompt is converted into an embedding, a mathematical representation of it, which guides the step-by-step denoising process that the model learned during training.
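The noising half of that process can be sketched with one common formulation (the DDPM-style one, assumed here because the article does not name a specific variant). The symbols are introduced for illustration: $x_0$ is the original image, $x_t$ the image after $t$ noising steps, $\beta_s$ the amount of noise added at step $s$, and $\epsilon$ random Gaussian noise.

$$
x_t = \sqrt{\bar{\alpha}_t}\, x_0 + \sqrt{1 - \bar{\alpha}_t}\, \epsilon, \qquad \bar{\alpha}_t = \prod_{s=1}^{t} (1 - \beta_s), \qquad \epsilon \sim \mathcal{N}(0, I)
$$

As $t$ grows, $\bar{\alpha}_t$ shrinks and the image becomes almost pure noise. Generation runs the other way: the model starts from noise and removes a little of it at each step, guided by the prompt embedding.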

In the early stages, working with text was the most accessible and effective way to interact with generative models. However, advances such as multimodality have opened new possibilities, allowing models to understand and generate not just text, but also images, audio, and more. Many models can now work with several of these modalities at once.

It is important to know the technology behind the models we use, as well as their specific variables and characteristics, in order to understand why certain behaviours occur: hallucinations, longer or shorter generation times, higher prices, or more or less accurate results, among others. It also helps to know which variables we can still adjust once a model has been chosen.

Variables

A detailed analysis of these variables can make the difference when building a consistent product with a strong user experience.

Shark tail

Time

Models currently take at least a few seconds to generate an image. Shorter generation times usually mean a higher cost per image or lower quality. Sometimes speed is crucial, as in real-time experiences, but in other cases you can afford a longer generation as long as you reduce the user’s perception of waiting. Here is some advice:

- Use animations or shift the focus to other elements while users are waiting.

- Do not overload loading states if users will see them often.

- Show counters or changing texts that convey that the system is working.

- If the generation process is divided into steps, use them to convey real progress.
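The last two tips can be sketched in a few lines. This is a minimal illustration, not a recommended implementation: the messages, the time thresholds, and the function names `waitingMessage` and `stepProgress` are all made up for the example.

```javascript
// Hypothetical waiting messages; texts and thresholds are illustrative only.
const WAITING_MESSAGES = [
  { afterMs: 0, text: "Sketching your image..." },
  { afterMs: 3000, text: "Adding details..." },
  { afterMs: 8000, text: "Almost there..." },
];

// Returns the message for the latest threshold that has already been reached.
function waitingMessage(elapsedMs) {
  let current = WAITING_MESSAGES[0].text;
  for (const step of WAITING_MESSAGES) {
    if (elapsedMs >= step.afterMs) current = step.text;
  }
  return current;
}

// Converts completed pipeline steps into a percentage, capped at 100,
// so the progress bar reflects real work rather than a fake timer.
function stepProgress(completedSteps, totalSteps) {
  if (totalSteps <= 0) return 0;
  return Math.min(100, Math.round((completedSteps / totalSteps) * 100));
}
```

A loading component could call `waitingMessage` on a timer and `stepProgress` whenever the backend reports that a step has finished.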

Format

A square format is often cheaper than horizontal or vertical ones when it means a smaller area has to be generated. Across millions of uses, this difference becomes significant. Evaluate whether your product truly requires a specific format, and check whether the API offers different resolutions. For example, with a larger image an autoregressive model needs to generate more zones and a diffusion model needs wider passes, which affects both the parameters you choose and the computational resources required.
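To get a feel for the difference, the area comparison can be sketched as follows. The sizes are example values, and the idea that cost grows in proportion to pixel count is an assumption made for illustration; real pricing depends on the provider.

```javascript
// Illustrative only: assumes cost scales with the number of pixels generated.
function pixelArea(format) {
  return format.width * format.height;
}

// How many times more area a format covers compared with a baseline format.
function relativeArea(format, baseline) {
  return pixelArea(format) / pixelArea(baseline);
}

const squareFormat = { width: 1024, height: 1024 };
const landscapeFormat = { width: 1792, height: 1024 };

// 1792 * 1024 / (1024 * 1024) = 1.75 times the area of the square format.
const landscapeRatio = relativeArea(landscapeFormat, squareFormat);
```

Under that assumption, a million landscape images would require the work of 1.75 million square ones.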

Shark column

Use user’s

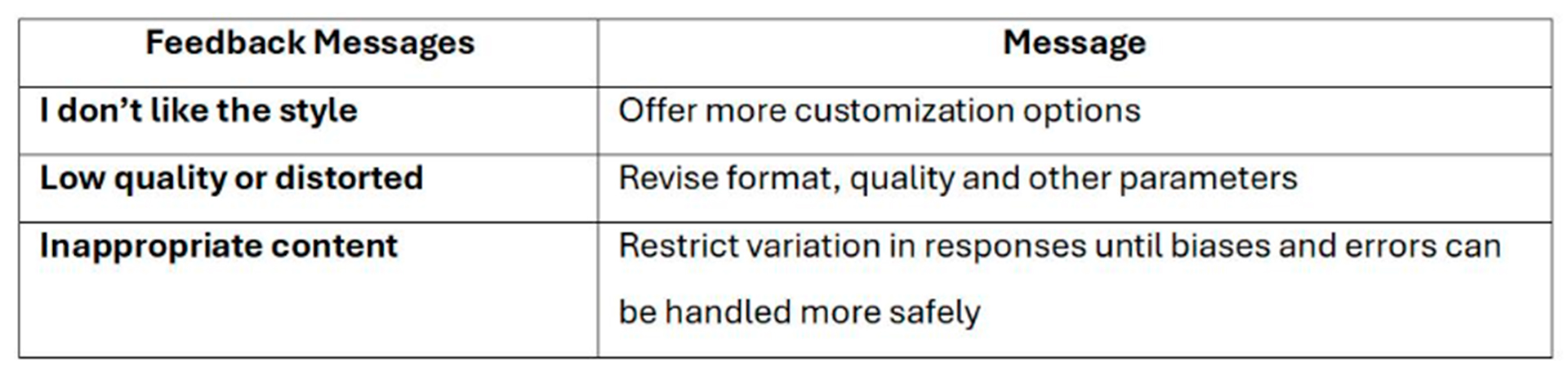

feedback and metrics to understand people perception, if they are seeing it as an innovative feature or maybe it is generating rejection. This will also help you detect biases, inappropriate content, or where your model needs improvements. Here are some examples of possible learnings.

Table examples

Money

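We cannot talk about real products without mentioning the available budget. Image generation is generally more expensive than text generation. Some ways to keep costs under control:

- Implement daily usage limits per user, for example with a constant such as `const MAX_DAILY_USES = 10;`.

- Offer paid plans.

- Implement limits to stop fraudulent use.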
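A daily limit per user can be sketched like this. The constant `MAX_DAILY_USES = 10` comes from the article; everything else (the in-memory `Map`, the helper names `dayKey` and `tryConsumeDailyUse`, counting days in UTC) is an assumption for the example. A real product would keep the counter on the server.

```javascript
const MAX_DAILY_USES = 10; // value from the article; tune it to your budget

// In-memory counter keyed by user and day, for illustration only.
const usageByUserAndDay = new Map();

// Builds a key such as "user-1:2025-04-01" using the UTC calendar day.
function dayKey(userId, date) {
  return `${userId}:${date.toISOString().slice(0, 10)}`;
}

// Records one use and returns true if the user is still under the limit;
// returns false without recording anything once the limit is reached.
function tryConsumeDailyUse(userId, date = new Date()) {
  const key = dayKey(userId, date);
  const used = usageByUserAndDay.get(key) ?? 0;
  if (used >= MAX_DAILY_USES) return false;
  usageByUserAndDay.set(key, used + 1);
  return true;
}
```

When `tryConsumeDailyUse` returns false, the interface can explain the limit and, if the product has one, point to a paid plan.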

Adaptability

It is important to understand that all these variables can change daily: the best combination today may not be the optimal one tomorrow. Build a scalable system in which you can adapt the variables to current usage and restrictions, and work on hiding this technical side from users by giving it a natural role in your app.
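One way to picture such a system is a set of presets that the app switches between as conditions change. This is only a sketch: the preset names, sizes, and thresholds are invented for illustration, and the remaining budget is just one possible signal to adapt to.

```javascript
// Hypothetical presets; names, sizes, and counts are assumptions.
const PRESETS = {
  rich: { size: 1024, imagesPerRequest: 2 },
  standard: { size: 768, imagesPerRequest: 1 },
  saver: { size: 512, imagesPerRequest: 1 },
};

// Picks a preset from the share of today's budget still available (0 to 1).
function pickPreset(remainingBudgetRatio) {
  if (remainingBudgetRatio > 0.5) return PRESETS.rich;
  if (remainingBudgetRatio > 0.2) return PRESETS.standard;
  return PRESETS.saver;
}
```

Because the rest of the app only asks for the current preset, the thresholds can change tomorrow without touching the user-facing design.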

Predictability

Generative AI can be unpredictable. That is sometimes a disadvantage, because we do not have complete control over what it produces. At the same time, unpredictability is one of its biggest strengths, since it allows the creation of unique content. LLMs may sometimes hallucinate or generate odd outputs, but we can influence the results by adjusting parameters such as temperature. Lower values make responses more focused and consistent, while higher values make them more creative. Some hallucinations are caused by gaps or biases in the data, and others by ambiguity in the prompt. Providing clearer and more structured input also helps guide the model toward more accurate results.
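The effect of temperature can be seen with a toy example. The sketch below applies a softmax with temperature to three made-up scores; the numbers and the function name `softmaxWithTemperature` are assumptions for illustration, and real models apply the same idea over a much larger set of candidate tokens.

```javascript
// Turns raw scores into probabilities, dividing by the temperature first.
function softmaxWithTemperature(scores, temperature) {
  const scaled = scores.map((value) => value / temperature);
  const max = Math.max(...scaled); // subtracting the max keeps Math.exp stable
  const exps = scaled.map((value) => Math.exp(value - max));
  const sum = exps.reduce((total, value) => total + value, 0);
  return exps.map((value) => value / sum);
}

const exampleScores = [2.0, 1.0, 0.5]; // made-up scores for three options

// Low temperature: most of the probability goes to the top option.
const focused = softmaxWithTemperature(exampleScores, 0.5);

// High temperature: probability is spread more evenly across options.
const creative = softmaxWithTemperature(exampleScores, 2.0);
```

With the lower temperature the first option dominates, which is why outputs become more consistent; with the higher one the alternatives get a real chance of being picked.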

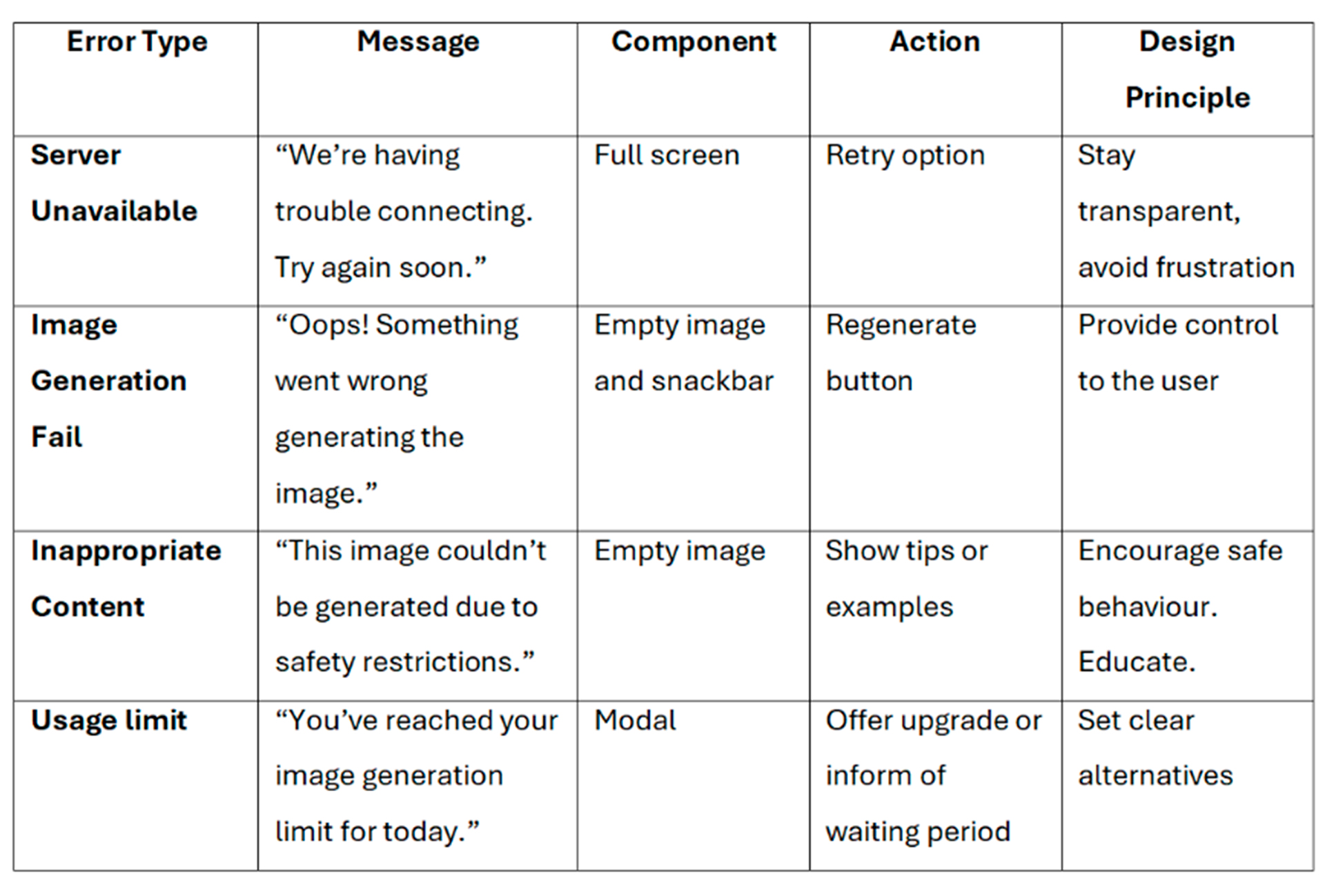

Errors

Technology can fail. Servers may reach usage limits, or image generation processes may encounter issues. It’s essential to inform users when such errors occur and, more importantly, to offer them actions they can take in those cases. Here are some examples that show how each error should be designed differently depending on the situation.

Table errors
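In code, pairing each error with its own message and action could look like the sketch below. The error codes, texts, and the `feedbackFor` helper are all hypothetical; they do not come from any specific API.

```javascript
// Hypothetical error codes mapped to a user-facing message and a next action.
const ERROR_FEEDBACK = new Map([
  ["RATE_LIMITED", { message: "We are receiving many requests right now.", action: "Try again in a few minutes" }],
  ["GENERATION_FAILED", { message: "The image could not be generated.", action: "Retry" }],
  ["CONTENT_BLOCKED", { message: "This request cannot be illustrated.", action: "Edit your description" }],
]);

// Used when the error is unknown, so users always get something actionable.
const FALLBACK_FEEDBACK = { message: "Something went wrong.", action: "Retry" };

function feedbackFor(errorCode) {
  return ERROR_FEEDBACK.get(errorCode) ?? FALLBACK_FEEDBACK;
}
```

Keeping the mapping in one place makes it easier to review the wording of every error together and to add new cases as they appear.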

Ethics

Be careful with the false impression that generated images can create of a product, person, or object. Even though legislation is still being written, uphold the basic values of transparency. Add captions that encourage users to verify information, or explain in detail how an image was produced if needed, to promote more informed and responsible image generation.

While many new products are adding generative AI features, others are working on detecting fake content, for example to fight identity theft. Google SynthID is an example of a tool focused on watermarking and identifying AI-generated content, with the aim of promoting transparency in product design.

Shark Jaw

Minimize biases

It’s essential to test your product in a variety of ways. Users enjoy experimenting and often use products in creative and unexpected ways. Models are being refined in this respect, and many of them expose safety settings to ensure more careful and responsible responses. Many companies recognize the importance of bias control and are increasingly publishing their principles and guidelines to promote transparency and ethical AI use. It is also in our hands to test prompts and adapt them to each use case, producing more inclusive designs that meet the needs of all users. Check edge cases where the input for image generation is off topic or touches on sensitive content, and design feedback for those cases.

```javascript
// Safety settings sent with a Gemini API request: one entry per harm category,
// each blocking content rated medium probability or above.
const safetySettings = [
  {
    category: "HARM_CATEGORY_HARASSMENT",
    threshold: "BLOCK_MEDIUM_AND_ABOVE"
  },
  {
    category: "HARM_CATEGORY_HATE_SPEECH",
    threshold: "BLOCK_MEDIUM_AND_ABOVE"
  },
  {
    category: "HARM_CATEGORY_SEXUALLY_EXPLICIT",
    threshold: "BLOCK_MEDIUM_AND_ABOVE"
  },
  {
    category: "HARM_CATEGORY_DANGEROUS_CONTENT",
    threshold: "BLOCK_MEDIUM_AND_ABOVE"
  }
];
```

Example of safetySettings when making a request to the Gemini API, April 2025.
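Designing feedback for blocked requests starts with detecting them. The sketch below assumes a response shape in which a blocked prompt carries `promptFeedback.blockReason` and a blocked output has a candidate with `finishReason` set to `"SAFETY"`. These field names are my assumption about the API and should be checked against the current Gemini documentation, especially for image outputs; the helper name `wasBlockedForSafety` is made up.

```javascript
// Assumed response shape (verify against the API docs before relying on it):
//   { promptFeedback: { blockReason }, candidates: [{ finishReason }] }
function wasBlockedForSafety(response) {
  // The prompt itself was rejected before any generation happened.
  if (response?.promptFeedback?.blockReason) return true;

  // The prompt was accepted, but a generated candidate was stopped for safety.
  const candidates = response?.candidates ?? [];
  return candidates.some((candidate) => candidate.finishReason === "SAFETY");
}
```

When the function returns true, the interface can show a specific message that invites the user to rephrase, instead of a generic error.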

Loyalty is one of the most controversial topics today. It seems easy to replicate an author’s style or a person’s appearance. The situation is still uncertain, and it is essential to avoid crossing legal boundaries while relying on common sense and logic. Drawing styles, illustrations, and photographs fall into legal grey areas, and more regulation will gradually be applied to generative AI. Differentiate between personal use, prototypes, and production.

Sustainability

Optimize resource usage from the design phase. Do users need images generated in real time, or can some of them be preloaded? Can we use a lightweight format? Do we really need so many images? Find a balance that works for your experience.
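One simple way to generate fewer images is to reuse results for repeated requests. The sketch below is a minimal in-memory cache; `createImageCache` and the `generate` function passed to it are hypothetical, and a real product would also need size limits and expiry.

```javascript
// Wraps a generation function so that each distinct prompt is generated once.
// `generate` is a placeholder for whatever actually produces the image.
function createImageCache(generate) {
  const cache = new Map();
  return function getImage(prompt) {
    // Normalize the prompt so trivial differences reuse the same entry.
    const key = prompt.trim().toLowerCase();
    if (!cache.has(key)) cache.set(key, generate(key));
    return cache.get(key);
  };
}
```

If `generate` returns a promise, the promise itself is cached, so simultaneous requests for the same prompt share a single generation.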

Conclusion

When image generation was in its early stages, we began building prototypes and exploring its applications, especially in real time. At first, image generation was quite arbitrary, but every day new improvements in the technology enhanced its capabilities. For improvised demos, I often generated images of sharks because I knew it was a topic I could control and that would produce satisfactory results. Each day my sharks looked better. That’s why I decided to title this article “Closing the gap between an AI-generated and a real shark”. It serves as a reminder of how rapidly technology evolves and how adaptable design must be to new variables, tools, or models.

The images in this article were generated using the Gemini 2.0 Flash experimental model in April 2025.