You should deploy more often

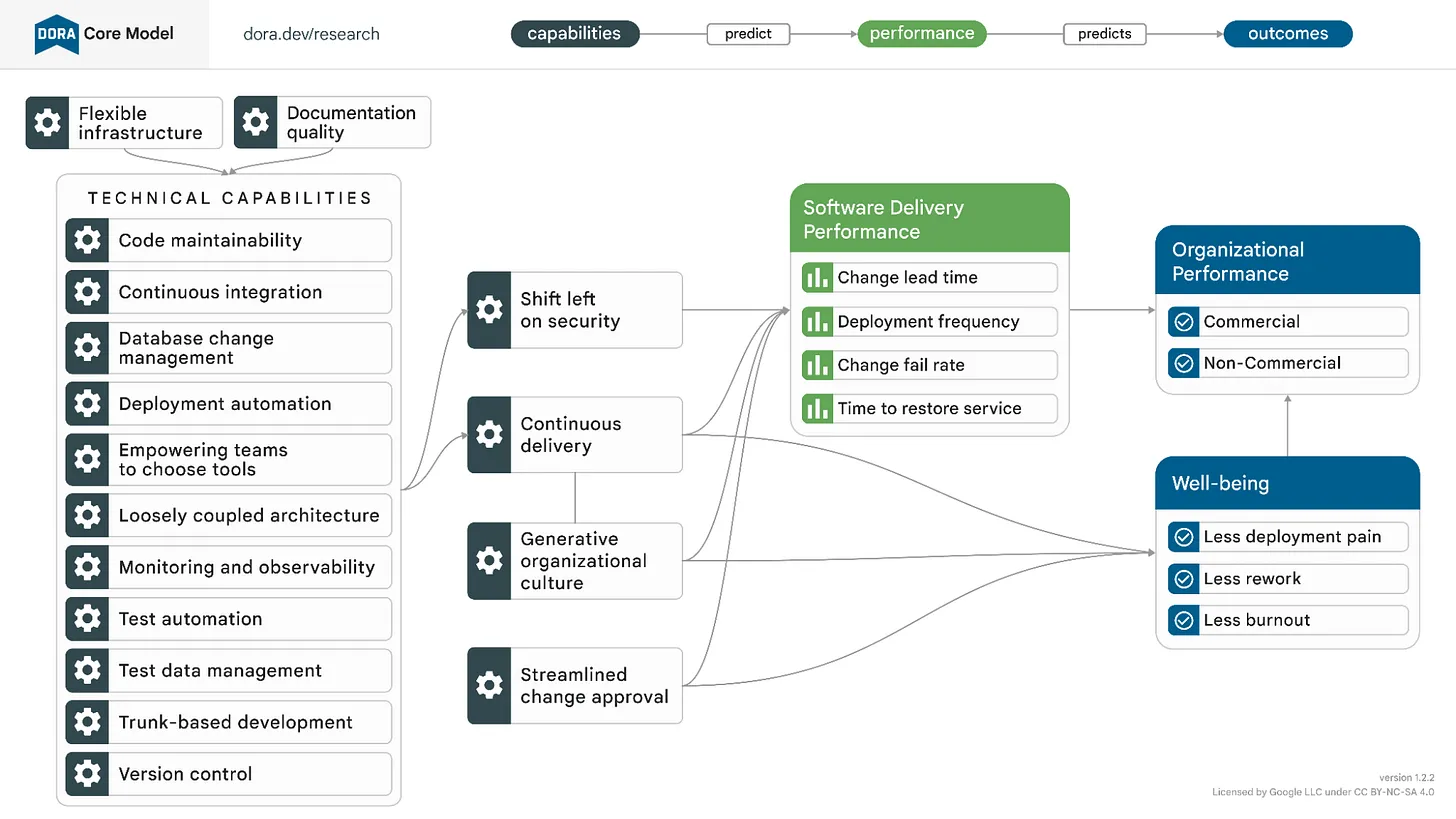

DevOps Research and Assessment (DORA) is a research program, currently run by Google, that aims to measure and understand software delivery and operations performance. Since 2014, DORA has conducted a yearly survey of software professionals across different organizations and published the results in its Accelerate State of DevOps Report. These reports not only present the survey results but also identify the factors that affect the performance and wellbeing of the team, and the practices that are recommended or discouraged.

Probably the most prominent conclusion of the DORA research is that the software delivery performance of an organization can be predicted by four very simple metrics:

- Deployment Frequency: How often is the software deployed to production?

- Change Lead Time: How long does it take for a code change to reach production?

- Change fail rate: When a deployment occurs, how likely is it that it fails or is rolled back?

- Time to restore service: When a failure in production is detected, how long does it take to be solved in production?
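To make these four definitions concrete, here is a minimal Python sketch that estimates them from a deployment log. The `Deployment` record and the `dora_metrics` function are hypothetical, not part of any DORA tooling, and the sketch assumes you can record, for each deployment, when its changes were committed, whether it failed, and when service was restored. It also approximates time to restore by measuring from the failed deployment rather than from the moment the failure was detected.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median
from typing import List, Optional


@dataclass
class Deployment:
    """Hypothetical record of one production deployment."""
    deployed_at: datetime
    commit_times: List[datetime]            # commit time of each change it shipped
    failed: bool = False                    # the deployment failed or was rolled back
    restored_at: Optional[datetime] = None  # when service was restored, if it failed


def _hours(delta: timedelta) -> float:
    return delta.total_seconds() / 3600


def dora_metrics(deploys: List[Deployment], period_days: float) -> dict:
    """Rough estimates of the four DORA metrics over an observation window."""
    # Speed: how often we deploy, and how long each change waits to reach production.
    frequency = len(deploys) / period_days
    lead_times = [_hours(d.deployed_at - c) for d in deploys for c in d.commit_times]

    # Stability: how often deployments fail, and how long recovery takes.
    failures = [d for d in deploys if d.failed]
    fail_rate = len(failures) / len(deploys) if deploys else 0.0
    # Assumption: restore time is measured from the failed deployment,
    # used here as a stand-in for the moment the failure was detected.
    restore_times = [_hours(d.restored_at - d.deployed_at)
                     for d in failures if d.restored_at is not None]

    return {
        "deployments_per_day": frequency,
        "median_lead_time_hours": median(lead_times) if lead_times else 0.0,
        "change_fail_rate": fail_rate,
        "median_time_to_restore_hours": median(restore_times) if restore_times else 0.0,
    }
```

Running it over, say, the last 30 days of deployments with `period_days=30` gives a baseline the team can track over time. Medians are used for the time-based metrics so that a single unusual change does not dominate the result.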

DORA's Core Model

Roughly speaking, two traits of the product are measured here: the first two metrics assess the speed at which the software is delivered, and the last two deal with the stability of the software delivered.

(Note: Starting in 2021, DORA also considers reliability as a fifth metric, which measures operational performance.)

Intuitively, these two traits seem to be in opposition: when we are afraid of making mistakes, our instinct is to slow down. Applied to software delivery, this would mean that deploying to production less often leads to fewer mistakes. Indeed, it is common for a low-quality software increment to be delayed until the offending issues are resolved.

However, the DORA team's findings contradict this intuition. In the most recent report, teams are clustered into three groups with Low, Medium and High performance (previous reports included a fourth group called Elite). The low-performance group deploys between once a month and once every six months, and its deployments fail around half of the time. The high-performance group deploys up to several times a day, with a change failure rate of 15% or less.

In other words, speed and stability are positively correlated, not negatively. High-performing teams are both fast and reliable, while low performers are slower to deliver value and also more prone to making mistakes.

By contrast, teams that deliver slowly but surely, or quickly but with errors, are the exception rather than the rule.

How can this be the case?

The most likely explanation is that a faster deployment pace requires the team to adopt several good practices that benefit both speed and stability.

You should keep on improving

As mentioned above, the DORA report not only presents the survey results but also provides insights and advice. One of these insights is that teams that adapt and improve continuously perform better. In this case, the empirical evidence supports the intuitive idea that continuous improvement is the path to better results.

Once you have a continuous improvement mentality, you need to decide which goal to pursue and which metric to measure to assess your improvement. The obvious choice will probably be fewer bugs in production and more features delivered in each release. We propose that, even if the final goal stays the same, the team should focus instead on increasing deployment frequency. Given that, as we saw before, speed and stability are positively correlated, this should also lead to the other goals, and it provides a clearer path to achieving them.

Whatever your situation…

There are several factors, beyond a team's ability to produce the code, that can limit how often it can deploy:

- Platform limitations: The platform that is going to run the software will determine how often it can be deployed. Some examples: A web application may be modified as often as desired, and users can immediately see the change. An updated version of an iOS app requires a review by Apple, which takes days. Updating the software embedded in a car may require a visit to the garage. The code in a credit card will never be changed.

- Business or organizational boundaries: A company or business unit may produce software that is then delivered to a different one, which installs and manages it on its own premises. In that case, the team creating the software loses control of the deployment process.

- Lack of confidence: A history of failed deployments and costly bugs in production can make all parties afraid of change, so deployments to production become less frequent. This triggers a vicious cycle: longer release cycles mean bigger and more complex changes per release, which increases the probability of bugs, which makes everyone even more afraid of change, and so on.
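The vicious cycle in the last point can be illustrated with a deliberately simple model. Assume, purely for illustration, that every change independently carries the same small probability p of introducing a defect. Then a release bundling n changes contains at least one defect with probability 1 - (1 - p)^n, which grows quickly with n. The function name and the 2% figure below are hypothetical.

```python
def release_defect_probability(changes: int, p_per_change: float) -> float:
    """Chance that a release contains at least one defect.

    Toy model (an assumption, not DORA data): every change independently
    introduces a defect with the same probability p_per_change.
    """
    return 1 - (1 - p_per_change) ** changes


# Same illustrative 2% risk per change, different release sizes.
for batch_size in (1, 5, 20, 50):
    risk = release_defect_probability(batch_size, 0.02)
    print(f"{batch_size:>2} change(s) per release: {risk:.0%} chance of a defect")
```

Under these assumptions, a single-change release is defective 2% of the time, while a 50-change release contains at least one defect roughly 64% of the time, and finding which of the 50 changes caused it is also harder. Real defects are not independent, so this is only intuition for why smaller batches help, not a prediction.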

On the other hand, your team has probably not yet reached the absolute speed limit your situation allows in every circumstance. For example, you may be able to deploy changes to an iOS app in two days, which is usually the time needed for the review process. But when an issue comes back from the review, do you need another couple of days to fix it? Another situation: maybe your client accepts changes only in two-month releases. But when a serious bug appears in production, how long does it take you to have a fix ready to deploy?

There is a way

We admitted above that what most teams want to improve is the number of features delivered and the quality of the delivery (measured in number of bugs). But going for those directly means taking a passive, reactive stance: to reduce bugs, you should have paid more attention, tested more exhaustively, or thought of this or that unintended consequence; to deliver more, you should have coded faster. It is hard to build an improvement plan from this point of view.

The other way to think about this is that the goal in the long run should be to do as well as the high-performing teams. These teams are capable of several deployments per day, so we should advance towards that level of efficiency.

Deploying faster does not mean simply setting a faster deployment schedule and following it no matter what. That will no doubt end badly. Rather, we should analyze our process, detect the bottlenecks, and remove them iteratively, so that the team naturally feels more confident and comfortable increasing the speed.
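One simple way to look for bottlenecks, assuming you can record how long each change spends in each stage of your process, is to compare the typical time per stage. The Python sketch below uses made-up stage names and hours purely for illustration; `find_bottleneck` is a hypothetical helper, not a standard tool.

```python
from statistics import median
from typing import Dict, List, Tuple

# Hypothetical hours each recent change spent in each stage of the process.
# Stage names and numbers are made up for illustration.
changes: List[Dict[str, float]] = [
    {"coding": 10, "waiting_for_review": 20, "qa": 8, "deploy": 2},
    {"coding": 6, "waiting_for_review": 30, "qa": 12, "deploy": 1},
    {"coding": 14, "waiting_for_review": 16, "qa": 6, "deploy": 3},
]


def find_bottleneck(changes: List[Dict[str, float]]) -> Tuple[str, float]:
    """Return the stage with the largest median time, and that median."""
    stages = changes[0].keys()
    medians = {stage: median(c[stage] for c in changes) for stage in stages}
    slowest = max(medians, key=medians.get)
    return slowest, medians[slowest]


print(find_bottleneck(changes))
```

With this sample data, waiting for review dominates, which would point the team towards remedies for review delays rather than towards coding faster. Once that stage shrinks, rerunning the same analysis reveals the next bottleneck.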

The bottlenecks we find will suggest the next steps needed to allow this acceleration. The following is an enumeration, undoubtedly incomplete, of situations a team may be in, and techniques that may be used to solve them. The exact definition of these practices is out of the scope of this writing, but the hope is that they spark the reader's curiosity.

- The user stories take too long to be completed: Making smaller User Stories. Slicing (dividing a big User Story into smaller chunks). Improving the architecture to make changes easier. Allowing code for features not yet finished to be deployed to production (Branch by abstraction, Feature Toggling).

- The coders are often blocked waiting for reviews: Pair programming. Post-commit reviews.

- Many bugs appear in production and/or during the QA phase: More unit testing. Test Driven Development (TDD). Test automation in general. Tracer bullets (minimal versions of a feature that touch all parts of the affected systems, so that issues surface early). Feature toggling (this enables practices such as dark launches and canary launches, which make releasing new features safer, and also allows turning off misbehaving features while the rest of the service keeps working).

- At the end of the period, all features are integrated at the same time, causing lots of conflicts: Trunk-based development, or at least short-lived branches. Decoupling the merging of the code from the completion of a feature. Slicing. Pair programming and ensemble (a.k.a. mob) programming.

- Product requirements change constantly: Feature Toggling. Slicing. Continuous Delivery (the head of the main branch is always ready to be deployed to production. See below).

- Bugs take too long to be resolved: Unit testing and Test Driven Development (TDD). Continuous Delivery.

- Deployments often end in failure: Deployment pipeline automation

- Deployments require a lot of extra work: Deployment pipeline automation. Automatic documentation.
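Feature toggling appears in several of the items above. The following Python sketch shows the core idea under simple assumptions: a hypothetical in-memory `FeatureFlag` with an on/off kill switch and a percentage rollout for canary-style releases, plus a hypothetical `checkout` function that uses it. Real systems usually read flags from a configuration service so they can be changed without redeploying; that part is left out here.

```python
import hashlib
from dataclasses import dataclass


@dataclass
class FeatureFlag:
    """Hypothetical in-memory flag; real systems usually load flags from config."""
    name: str
    enabled: bool = False     # kill switch: False turns the feature off for everyone
    rollout_percent: int = 0  # canary: share of users (0-100) who get the feature

    def is_on_for(self, user_id: str) -> bool:
        if not self.enabled:
            return False
        # Hash flag name + user id into a stable bucket 0-99, so the same user
        # gets the same answer on every request for this flag.
        digest = hashlib.sha256(f"{self.name}:{user_id}".encode()).hexdigest()
        bucket = int(digest, 16) % 100
        return bucket < self.rollout_percent


def checkout(user_id: str, new_flow: FeatureFlag) -> str:
    # The new code path is already deployed, but only runs when the flag allows it.
    if new_flow.is_on_for(user_id):
        return "new checkout"
    return "old checkout"
```

A team might ship the new checkout with `enabled=False`, then enable it for a small `rollout_percent`, raise the percentage as confidence grows, and set `enabled` back to `False` if the feature misbehaves, while the rest of the service keeps working. Hashing the user id, rather than picking at random per request, keeps each user's experience consistent during the rollout.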

We have not yet discussed the widely popular practices of CI/CD, which can be defined as follows:

CI: Continuous Integration. The practice of integrating code into the main branch of the repository at least once a day, with each commit triggering a build of the code and a suite of automated tests.
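The "at least once a day" part of this definition is easy to check mechanically. As a minimal sketch, assuming you can obtain, for each branch, the time of its oldest commit not yet merged into main (for example from git, which is left out here), a small Python function can list the branches that have gone too long without integrating. The function name and input format are hypothetical.

```python
from datetime import datetime, timedelta
from typing import Dict, List


def branches_to_integrate(
    oldest_unmerged_commit: Dict[str, datetime],
    now: datetime,
    max_age: timedelta = timedelta(days=1),
) -> List[str]:
    """Branches that have gone longer than max_age without reaching main.

    Input is hypothetical: a map from branch name to the time of its oldest
    commit that is not yet in the main branch.
    """
    return sorted(
        name
        for name, oldest in oldest_unmerged_commit.items()
        if now - oldest > max_age
    )


now = datetime(2024, 3, 1, 12, 0)
print(branches_to_integrate(
    {"fix-login": datetime(2024, 3, 1, 9, 0),
     "big-refactor": datetime(2024, 2, 26, 10, 0)},
    now,
))
```

Here only `big-refactor` would be reported. A team could surface such a list daily as a nudge to merge, or to split the work further, rather than as a hard gate.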

CD: This may mean either Continuous Deployment or Continuous Delivery (the latter is in fact listed above as one of the recommended practices), and the two are not the same thing. Continuous Deployment means that each commit is automatically deployed to production without manual intervention. Continuous Delivery is more relaxed: the code is always ready to be deployed on demand.
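The difference between the two meanings of CD comes down to the last step of the pipeline. The Python sketch below is a hypothetical pipeline run for a single commit on the main branch: `run_pipeline` and its `build`, `test` and `deploy` callables are stand-ins for whatever jobs a real CI service would run, not an actual API. Both variants build and test every commit; only Continuous Deployment pushes a green commit to production automatically.

```python
from typing import Callable

Step = Callable[[str], bool]  # takes a commit id, returns True on success


def run_pipeline(
    commit: str,
    build: Step,
    test: Step,
    deploy: Step,
    continuous_deployment: bool,
) -> str:
    """Hypothetical pipeline run for one commit on the main branch."""
    # CI part: every commit is built and tested automatically.
    if not build(commit):
        return "build failed"
    if not test(commit):
        return "tests failed"
    # Continuous Deployment: a green commit goes straight to production.
    if continuous_deployment:
        return "deployed" if deploy(commit) else "deploy failed"
    # Continuous Delivery: the commit is releasable; a person decides when to deploy.
    return "ready to deploy"
```

In Continuous Delivery mode the `deploy` step is never called here; it would be triggered on demand later, for any commit that reached "ready to deploy".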

Both CI and CD are wide-ranging practices that require many of the techniques mentioned before in order to work (automated tests, an automated deployment pipeline, short-lived branches), so they are less suited to a small-steps approach. However, they may be considered an intermediate milestone, or a more general prerequisite, on the way to faster deployments.

We have also not mentioned many other basic good practices that developers need to follow before even considering the recommended techniques. We consider them already accepted in today's software development, and necessary both for a better development experience in general and for a faster deployment pace. Some of these are code versioning with tools such as git, multiple environments (development and production, for example), and team-wide coding conventions.

You should wait for no one

As a member of a development team, you may be convinced by everything said here and still feel unable to convince the team or the client to deploy faster. Or you may be in one of the situations mentioned above that make faster releases impossible. Still, you are not helpless. You can turn things around by starting with the techniques mentioned, so that you are ready to deploy faster when the opportunity arises.

No matter your role in the team (developer, product, QA), there is always some technique you can learn, adopt, or help adopt. You also do not need to convince the whole team to start: you can always advance in this direction yourself, in the tasks you take on. You do not need permission to do a better job.

The more of these techniques are in place, the easier it becomes to deploy the product faster, and the better an idea it will seem. Be patient, listen to the needs and problems of the other stakeholders, and then try to solve them. They will most likely be happy to deliver value faster once they feel it is safe. And even before that happens, when an emergency arises, such as a hotfix or a request for a feature that “cannot be delayed”, you will see the rewards of your efforts.

Conclusion

The empirical evidence suggests that speed of delivery and software stability are not in opposition to each other, but rather are positively correlated. With this observation in mind, we propose that, even if your team's goal is to reduce the number of bugs and deliver more features, it is a good idea to aim your efforts at deploying more often instead. This focus will guide the adoption of practices and reveal issues, helping the team's performance in many ways.

These practices are mostly technical and related to the adoption of genuine CI/CD, but benefit from the participation of all stakeholders in the team and even the organization. At the same time, these practices provide a clearer path for the improvement of any team, no matter the status of the software or the processes currently in place. Therefore, these practices will support every member of the team, empowering them to improve the product.
