Unleashing AI Power in Your Pocket: Small Language Models with Google AI Edge

In today's AI-powered world, the ability to run advanced machine learning models directly on mobile devices is revolutionizing our relationship with technology. Gone are the days when sophisticated AI capabilities required a constant connection to remote servers. With Small Language Models (SLMs) and Google AI Edge technology, developers can bring the latest AI capabilities directly to Android devices. In this article, we explore how to use these technologies to create fast, private, and efficient AI experiences without relying on the cloud.

What Are Small Language Models?

Imagine having a miniaturized version of ChatGPT running entirely on your smartphone. That's what Small Language Models offer: compact language AI systems capable of operating within the limitations of mobile devices, yet powerful enough for sophisticated tasks.

Unlike much larger models such as GPT-4 or Claude, which are generally believed to contain hundreds of billions of parameters (their exact sizes are not publicly disclosed), SLMs typically range from a few million to around 10 billion parameters. This reduced size makes them ideal candidates for running on local devices.
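As a rough, back-of-the-envelope illustration of why parameter count matters on a phone, the memory taken by a model's weights is approximately the number of parameters multiplied by the bytes used to store each one. The sketch below assumes that weights dominate memory use and ignores activations, the KV cache, and runtime overhead, so real figures will be higher; the model sizes are example values.

```python
# Rough estimate of how much memory a model's weights occupy.
# Assumption: weights dominate; activations, KV cache and runtime overhead are ignored.

BYTES_PER_PARAM = {
    "fp32": 4.0,
    "fp16": 2.0,
    "int8": 1.0,
    "int4": 0.5,
}


def weight_memory_gb(num_params: float, dtype: str) -> float:
    """Return the approximate size of the weights in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


if __name__ == "__main__":
    # Example sizes: two SLM-scale models and a GPT-3-scale model.
    for params in (1e9, 4e9, 175e9):
        sizes = ", ".join(
            f"{dtype}: {weight_memory_gb(params, dtype):.1f} GB" for dtype in BYTES_PER_PARAM
        )
        print(f"{params / 1e9:.0f}B params -> {sizes}")
```

Under these assumptions, a 1-billion-parameter model needs about 4 GB in FP32 but only about 0.5 GB with 4-bit weights, whereas a 175-billion-parameter model (the size of GPT-3) needs about 350 GB even in FP16, far beyond the RAM of any phone.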

Despite their small size, these models offer many of the same capabilities as Large Language Models, though usually with less breadth and depth:

· Text generation

· Summarization

· Translation

· Question-answering

· Basic reasoning

The magic lies in their balance between capability and efficiency, offering enough intelligence to be useful while remaining small enough to run on your phone.

Why Run Language Models Locally on Android?

You might wonder: "Why bother running models on-device when cloud APIs are readily available?" The benefits are compelling:

1. Unmatched Privacy

When data is processed entirely on the device, the user's sensitive information never leaves the phone. This is especially relevant for apps that handle personal data, private conversations, or confidential information, as the risk of leaks in transit or unauthorized access on external servers is eliminated. This approach also makes it easier to comply with data protection regulations such as the GDPR, since control remains in the hands of the user.

2. Offline Functionality

Local model execution allows applications to run without an internet connection. This is critical for users in areas with limited coverage, during international travel, or in situations where connectivity is unstable or expensive. It also ensures that AI capabilities are always available, providing a continuous and reliable experience regardless of the network environment.

3. Reduced Costs

Running inference on the device instead of calling cloud APIs eliminates per-request usage fees and the associated data traffic costs. This is especially beneficial for applications with a high volume of users or interactions: because each new user brings their own compute, local processing scales without a matching increase in operating expenses. It also reduces the need for backend infrastructure, simplifying application architecture and maintenance.

4. Leveraging Distributed Computing Capacity

Although running AI models locally may increase mobile device battery consumption compared to offloading processing to the cloud, this strategy has global benefits. By distributing the workload across millions of devices, it reduces reliance on large data centers, which can translate into lower operating costs and a significant reduction in centralized power consumption.

“LiteRT addresses five key limitations of ODML (On-Device Machine Learning): latency, privacy, connectivity, size, and power consumption,” Google’s documentation states.

The Google AI Edge Stack: Your Toolkit for On-Device AI

Google has built a comprehensive ecosystem for on-device machine learning through its AI Edge Stack. Two key components, MediaPipe and LiteRT, form the foundation for deploying SLMs (and other models) on Android.

MediaPipe: The Solutions Framework

MediaPipe provides ready-made solutions for common machine learning tasks with cross-platform compatibility. It includes:

· MediaPipe Tasks: Cross-platform APIs for deploying solutions including LLM inference.

· MediaPipe Models: Pre-trained, ready-to-run models for various tasks.

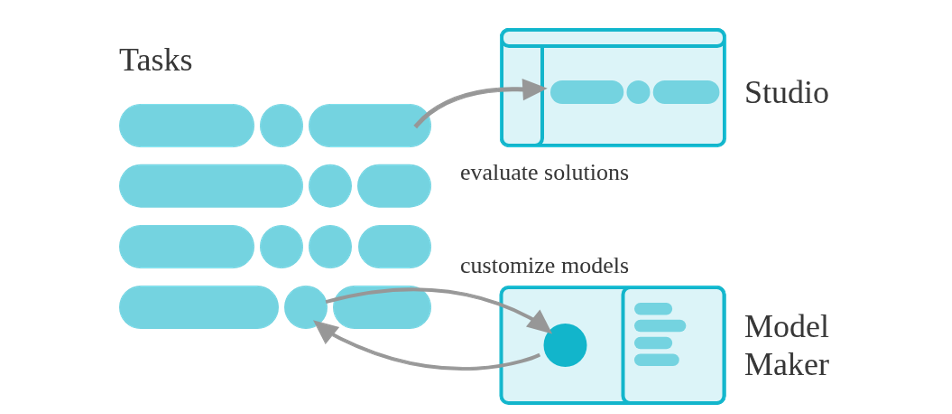

· MediaPipe Studio: Tools to visualize, evaluate, and benchmark solutions.

· MediaPipe Model Maker: Customization tools for adapting models to your data.

Chart 1

LiteRT: The Runtime Engine

LiteRT (formerly TensorFlow Lite) serves as Google's high-performance runtime for on-device AI. It provides the execution environment for your models with features like:

· Multi-platform support: Compatible with Android, iOS, embedded Linux, and microcontrollers

· Multi-framework model options: Convert models from TensorFlow, PyTorch, and JAX

· Hardware acceleration: Optimize performance through GPU and NPU delegates

· Diverse language support: SDKs for Java/Kotlin, Swift, Objective-C, C++, and Python

Adapting Models to Your Use Case

As we've mentioned, SLMs open up many possibilities, although they aren't the most powerful models. For a specific use case, fine-tuning them can noticeably improve their performance. One of the most common ways to do this is the LoRA fine-tuning technique.

Fine-tuning with LoRA (Low-Rank Adaptation)

Low-Rank Adaptation (LoRA) is an innovative technique that allows models such as Gemma 3 to be adapted to specific tasks without retraining all of their parameters. Instead of modifying the original model's billions of weights, LoRA freezes them and, for each adapted weight matrix, trains two small matrices (A and B) whose product approximates the required update through a low-rank decomposition. This drastically reduces the computational resources needed: in the original LoRA experiments on GPT-3, it cut the number of trainable parameters by about 10,000 times and the GPU memory requirement by roughly 66% compared with full fine-tuning. And because the original weights stay frozen, the model retains its general knowledge while specializing in new tasks, which helps mitigate what is known as "catastrophic forgetting."
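In matrix form, LoRA keeps a pretrained weight matrix W (size d × k) frozen and learns the update as the product BA, where B is d × r, A is r × k, and the rank r is much smaller than d and k. The plain-Python sketch below is written for illustration, not taken from any library: it shows the trainable-parameter arithmetic and the adapted forward pass h = Wx + (α/r)·B(Ax), using the α/r scaling from the original LoRA paper. The layer size 2048 × 2048 and rank 8 are arbitrary example values.

```python
# Minimal LoRA sketch in plain Python (no ML framework), for illustration only.
# A frozen weight matrix W (d x k) is adapted as W + (alpha / r) * B @ A,
# where B is d x r and A is r x k. Only A and B would be trained.

import random


def matmul(X, Y):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*Y)] for row in X]


def full_trainable_params(d: int, k: int) -> int:
    """Full fine-tuning updates every entry of W."""
    return d * k


def lora_trainable_params(d: int, k: int, r: int) -> int:
    """LoRA trains only A (r x k) and B (d x r)."""
    return r * (d + k)


def lora_forward(W, A, B, x, alpha: float, r: int):
    """Compute h = W x + (alpha / r) * B (A x) for a vector x (list of floats)."""
    x_col = [[v] for v in x]
    base = matmul(W, x_col)               # frozen path
    delta = matmul(B, matmul(A, x_col))   # low-rank path: A x first, no d x k matrix built
    scale = alpha / r
    return [b[0] + scale * u[0] for b, u in zip(base, delta)]


if __name__ == "__main__":
    d, k, r = 2048, 2048, 8
    print("full fine-tuning:", full_trainable_params(d, k))   # 4194304
    print("LoRA (r=8):", lora_trainable_params(d, k, r))       # 32768

    # With B initialised to zeros (as in the LoRA paper), the adapted layer
    # initially behaves exactly like the frozen one.
    random.seed(0)
    W = [[random.gauss(0, 1) for _ in range(4)] for _ in range(3)]
    A = [[random.gauss(0, 0.01) for _ in range(4)] for _ in range(2)]
    B = [[0.0] * 2 for _ in range(3)]
    print(lora_forward(W, A, B, [1.0, 2.0, 3.0, 4.0], alpha=16, r=2))
```

For this example layer, LoRA trains 32,768 parameters instead of about 4.2 million, a 128× reduction for a single matrix; the savings grow with layer size, which is how the much larger reductions reported for GPT-3 arise.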

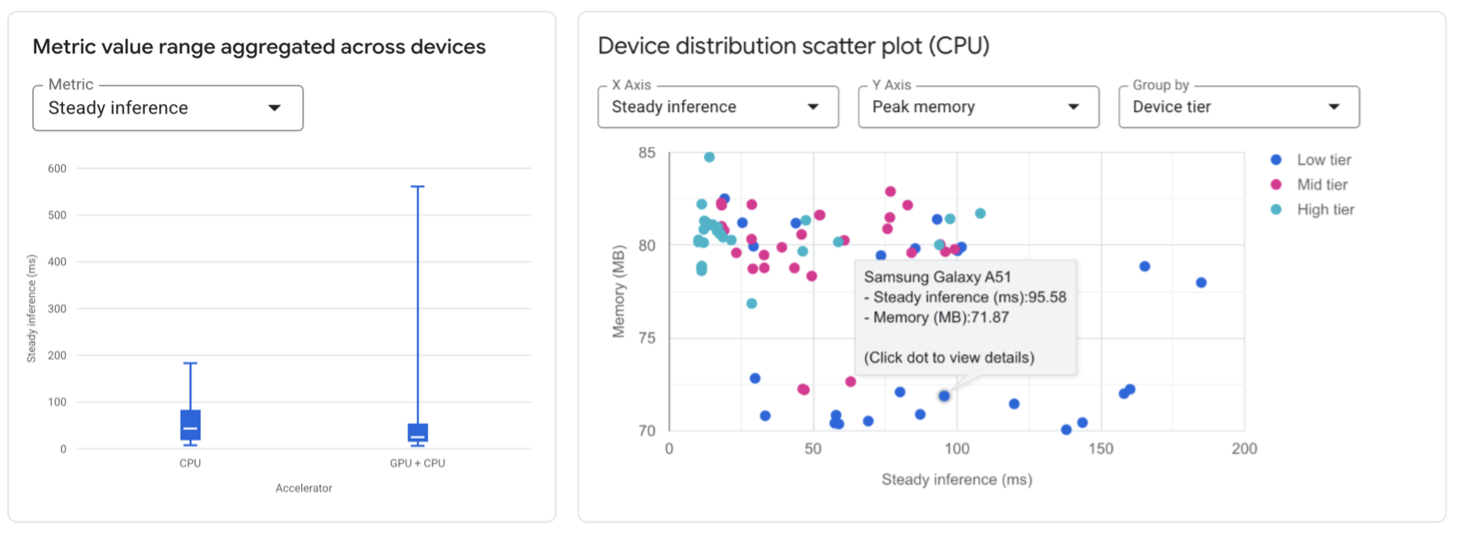

Testing Performance with AI Edge Portal

After customizing your model, it's key to test its performance on different Android devices. The new AI Edge Portal is designed to make this easy. It is still in private beta, which you can sign up for, and once generally available it will allow you to:

· Test models across hundreds of physical device types.

· Identify hardware-specific performance variations.

· Compare different model configurations.

· Access detailed metrics including latency and memory usage.

Chart 2

Optimizing Performance

To ensure smooth performance of SLMs on Android, consider these best practices:

1. Quantize your models: Convert from FP32 to INT8 to reduce size and improve speed.

2. Leverage hardware acceleration: Enable GPU or NPU delegates when available.

3. Limit sequence length: Cap generation length based on device capabilities.

4. Implement lazy loading: Load models only when needed.

5. Consider model size vs performance tradeoffs: Not every application needs the largest model.
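To make the first practice more concrete, the sketch below shows the core idea of INT8 quantization: each FP32 weight is mapped to an 8-bit integer code using a shared scale factor, and multiplied back by that scale when the value is needed. This is a simplified symmetric, per-tensor scheme written for illustration; production toolchains typically use more elaborate variants (for example per-channel scales or calibration data), and none of the function names below come from LiteRT.

```python
# Symmetric per-tensor INT8 quantization sketch (illustrative only).

def quantize_int8(values):
    """Map floats to int8 codes in [-127, 127] using one shared scale."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs > 0 else 1.0   # avoid dividing by zero
    codes = [max(-127, min(127, round(v / scale))) for v in values]
    return codes, scale


def dequantize_int8(codes, scale):
    """Recover approximate float values from int8 codes."""
    return [c * scale for c in codes]


if __name__ == "__main__":
    weights = [0.12, -0.5, 0.33, 0.99, -1.27]
    codes, scale = quantize_int8(weights)
    restored = dequantize_int8(codes, scale)
    max_err = max(abs(w - r) for w, r in zip(weights, restored))
    print("codes:", codes)
    print("scale:", scale)
    print("max abs error:", max_err)   # at most scale / 2 (plus float rounding)
```

Storing one byte per weight instead of four shrinks weight storage by about 4×, and the rounding error introduced for each weight is bounded by half the scale.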

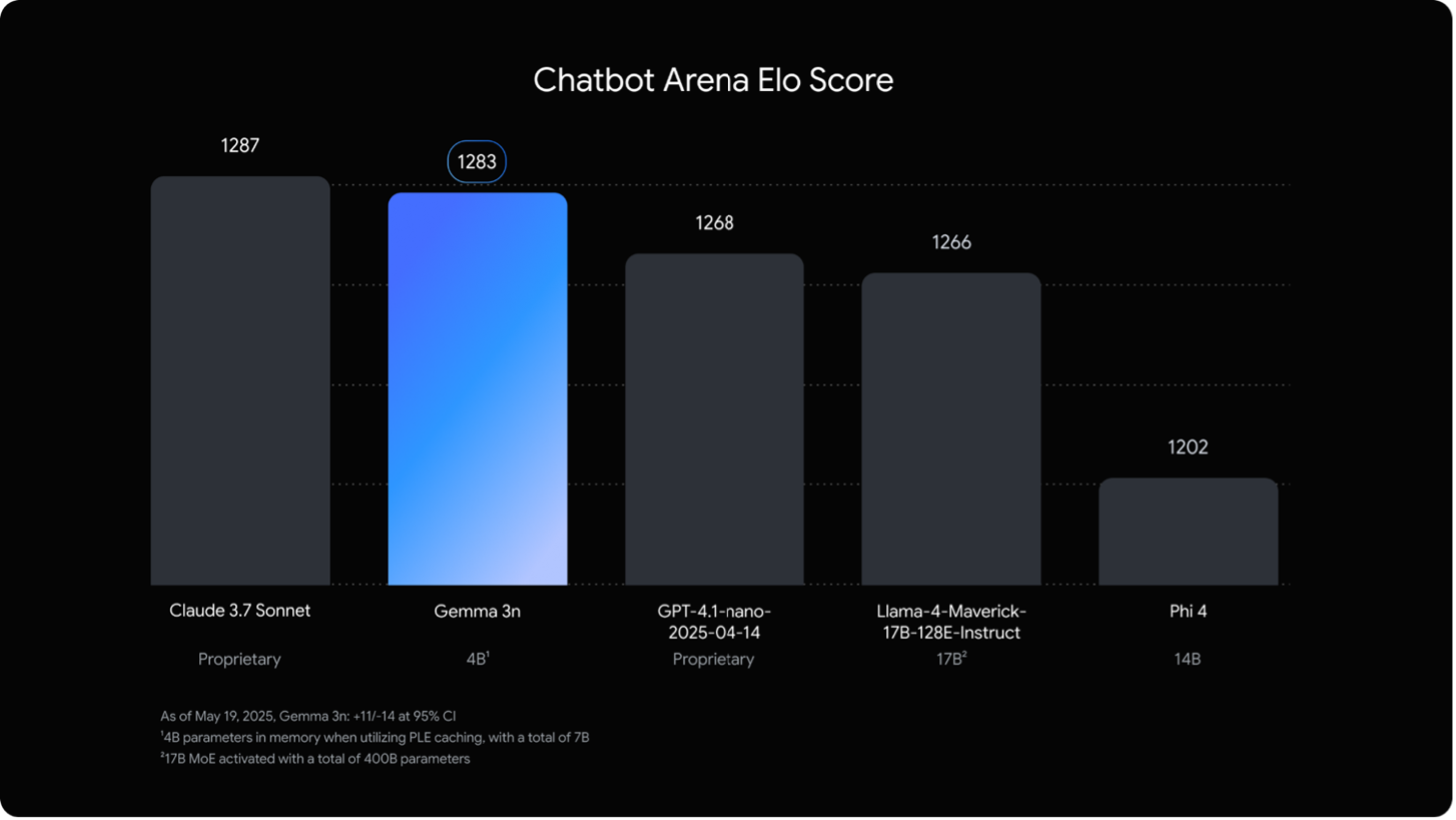
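Practices 3 and 4 do not depend on any particular runtime. The plain-Python sketch below illustrates both: a wrapper that defers loading until the model is first needed, and a generation loop that stops at an end-of-sequence token or at a fixed token budget. `LazyModel`, `generate`, the dummy loader, the `<eos>` marker, and the limit of 8 tokens are hypothetical names and values chosen for illustration; they are not part of MediaPipe or LiteRT.

```python
# Sketch of lazy loading (practice 4) and a capped generation loop (practice 3).
# All names are hypothetical; a real app would call its inference runtime instead.

from typing import Any, Callable, List, Optional


class LazyModel:
    """Defers the expensive load until the model is first requested."""

    def __init__(self, loader: Callable[[], Any]):
        self._loader = loader
        self._model: Optional[Any] = None
        self.load_count = 0

    def get(self) -> Any:
        if self._model is None:          # first use: load and cache
            self._model = self._loader()
            self.load_count += 1
        return self._model               # later uses: reuse the cached instance


def generate(next_token: Callable[[List[str]], str], prompt: List[str],
             max_new_tokens: int, eos: str = "<eos>") -> List[str]:
    """Produce tokens until `eos` appears or `max_new_tokens` is reached."""
    output: List[str] = []
    for _ in range(max_new_tokens):
        token = next_token(prompt + output)
        if token == eos:
            break
        output.append(token)
    return output


if __name__ == "__main__":
    def load_dummy_model() -> Callable[[List[str]], str]:
        print("loading model...")        # a real loader would read the model file here
        return lambda tokens: "token"    # dummy model that never emits <eos>

    model = LazyModel(load_dummy_model)  # nothing is loaded yet
    print(generate(model.get(), ["Hello"], max_new_tokens=8))
    print(generate(model.get(), ["Hi"], max_new_tokens=8))
    print("times loaded:", model.load_count)  # 1
```

Because the loader runs only once, the first request pays the loading cost and later requests reuse the cached model, while the token budget keeps worst-case latency and memory use predictable on slower devices.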

The Future of On-Device AI

The landscape of on-device language models is evolving rapidly. Recent developments, such as the performance and multimodal capabilities of Google's new Gemma 3n model, are only adding to the excitement.

Chart 3

New RAG (Retrieval-Augmented Generation) and Function Calling libraries for SLMs, which are set to be expanded, open the door to increasingly sophisticated applications.
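RAG grounds a model's answer in documents fetched at query time. The libraries mentioned above are not shown here; as a hedged illustration of the general idea only, the sketch below ranks a few local documents against a query and prepends the best match to the prompt an on-device model would receive. The bag-of-words "embedding" is a deliberately crude stand-in for a real embedding model, and all function names and sample documents are hypothetical.

```python
# Toy retrieval step of RAG, for illustration only. It does NOT use any Google
# library; a bag-of-words vector stands in for a real embedding model.

import math
import re
from collections import Counter
from typing import List


def embed(text: str) -> Counter:
    """Crude 'embedding': lowercase word counts."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, docs: List[str], k: int = 1) -> List[str]:
    """Return the k documents most similar to the query."""
    q = embed(query)
    return sorted(docs, key=lambda d: cosine(q, embed(d)), reverse=True)[:k]


def build_prompt(query: str, docs: List[str], k: int = 1) -> str:
    """Prepend the retrieved context to the user's question."""
    context = "\n".join(retrieve(query, docs, k))
    return f"Answer using this context:\n{context}\n\nQuestion: {query}"


if __name__ == "__main__":
    docs = [
        "Opening hours: the store opens at 9 am.",
        "Returns are accepted within 30 days.",
    ]
    print(build_prompt("When does the store open?", docs))
```

In a real app, the documents and their embeddings would be computed ahead of time and stored on the device, so only the query needs to be embedded at request time.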

We will see advances in:

· Model efficiency and performance.

· Multimodal capabilities.

· Hardware acceleration for AI.

· Customization tools.

Conclusion

Small Language Models powered by Google AI Edge represent a major leap forward in bringing AI directly to Android devices. By eliminating the need for cloud connectivity, these technologies enable faster, more private, and more efficient applications.

Whether you're building a content generation tool, an intelligent assistant, or a translation app, the combination of MediaPipe and LiteRT provides a robust foundation for implementing SLMs that run entirely on-device.

The future of AI isn't just in the cloud: it's in your pocket, running locally on your Android device.

Are you ready to start experimenting? Head over to the LiteRT Hugging Face Community to explore the available models, visit the google-ai-edge/gallery repository, and start building your own on-device AI experiences!

References and links of interest

1. https://www.ibm.com/think/topics/small-language-models

2. https://huggingface.co/blog/jjokah/small-language-model

3. https://ai.google.dev/edge/litert

4. https://ai.google.dev/edge/mediapipe

5. https://ai.google.dev/gemma/docs/core/lora_tuning

6. https://github.com/google-ai-edge/gallery
